LVLM's Capabilities of Interest

Visual Perception

The ability to recognize the scene or objects in images, the preliminary ability of the human visual system.

Visual Reasoning

It requires a comprehensive understanding of images and related texts.

Visual Knowledge Acquisition

It entails understanding images beyond perception to acquire knowledge.

Visual Commonsense

The general visual knowledge commonly shared across the world, as opposed to the visual information specific to a single image.

Object Hallucination

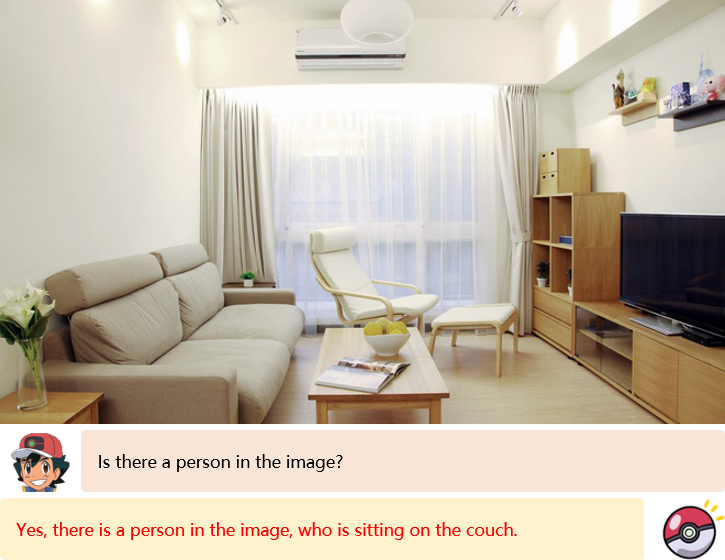

The generated results are inconsistent with the target images in the descriptions.

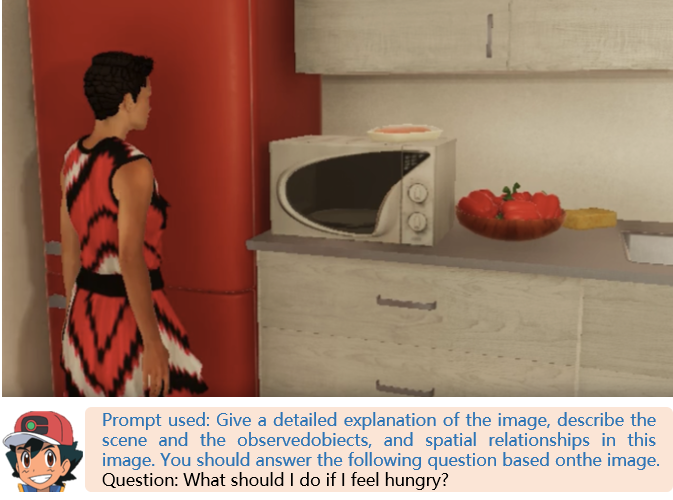

Embodied Intelligence

It aims to create agents, such as robots, which learn to solve challenging tasks requiring environmental interaction.

Visual Perception

The ability to recognize the scene or objects in images, the preliminary ability of the human visual system.

Visual Reasoning

It requires a comprehensive understanding of images and related texts.

Visual Knowledge Acquisition

It entails understanding images beyond perception to acquire knowledge.

Visual Commonsense

The general visual knowledge commonly shared across the world, as opposed to the visual information specific to a single image.

Object Hallucination

The generated results are inconsistent with the target images in the descriptions.

Embodied Intelligence

It aims to create agents, such as robots, which learn to solve challenging tasks requiring environmental interaction.

Visual Perception

Visual perception is the ability to recognize the scene or objects in images, the preliminary ability of the human visual system. We evaluate this capability of models through image classification, multi-class identification, and object counting. They measure how well an LVLM grasps high-level semantic information, while object counting assesses the recognition ability for fine-grained objects.

Image Classification

ImageNet1K

The ImageNet1K dataset consists of 1K object classes and contains 1,281,167 training images, 50 images per class for validation, and 100 images per class for testing.

Evaluation data: 50K (val)

CIFAR10

CIFAR10 has 10 classes and 6000 images per class with 5000 for training and 1000 for testing.

Evaluation data: 10K (test)

Pets37

The Oxford-IIIT Pet dataset comprises 37 categories with 25 dog breeds and 12 cat ones and ~200 images per class. There are 7349 images in total, 3680 trainval images, and 3669 test images.

Evaluation data: 3669 (test)

Flowers102

The Oxford 102 Flower dataset includes 120 flower categories with 40 to 258 images for each class and 8189 images in total, namely 10 images per class for both train and val and the rest for a test.

Evaluation data: 6149 (test)

ImageNet1K

The ImageNet1K dataset consists of 1K object classes and contains 1,281,167 training images, 50 images per class for validation, and 100 images per class for testing.

Evaluation data: 50K (val)

CIFAR10

CIFAR10 has 10 classes and 6000 images per class with 5000 for training and 1000 for testing.

Evaluation data: 10K (test)

Pets37

The Oxford-IIIT Pet dataset comprises 37 categories with 25 dog breeds and 12 cat ones and ~200 images per class. There are 7349 images in total, 3680 trainval images, and 3669 test images.

Evaluation data: 3669 (test)

Flowers102

The Oxford 102 Flower dataset includes 120 flower categories with 40 to 258 images for each class and 8189 images in total, namely 10 images per class for both train and val and the rest for a test.

Evaluation data: 6149 (test)

Multi-Class Identification

COCO-MCI

We ask the model if a certain object exists in the image and attend to individual objects, which is decoupled from high-level semantics and thus a more appropriate test bed for fine-grained visual understanding evaluation. We construct the dataset of this problem with images from the validation set of MSCOCO.

Evaluation data: 10000 (val)

VCR-MCI

Same as COCO-MCI, but using images from the validation set of the VCR dataset.

Evaluation data: 10000 (val)

COCO-MCI

We ask the model if a certain object exists in the image and attend to individual objects, which is decoupled from high-level semantics and thus a more appropriate test bed for fine-grained visual understanding evaluation. We construct the dataset of this problem with images from the validation set of MSCOCO.

Evaluation data: 10000 (val)

VCR-MCI

Same as COCO-MCI, but using images from the validation set of the VCR dataset.

Evaluation data: 10000 (val)

Object Counting

COCO-OC

We ask the model to count the number of a certain object appearing in the image and attend to individual objects, which is decoupled from high-level semantics and thus a more appropriate test bed for fine-grained visual understanding evaluation. We construct the dataset of this problem with images from the validation set of MSCOCO.

Evaluation data: 10000 (val)

VCR-OC

Same as COCO-OC, but using images from the validation set of the VCR dataset.

Evaluation data: 10000 (val)

COCO-OC

We ask the model to count the number of a certain object appearing in the image and attend to individual objects, which is decoupled from high-level semantics and thus a more appropriate test bed for fine-grained visual understanding evaluation. We construct the dataset of this problem with images from the validation set of MSCOCO.

Evaluation data: 10000 (val)

VCR-OC

Same as COCO-OC, but using images from the validation set of the VCR dataset.

Evaluation data: 10000 (val)

Visual Knowledge Acquisition

Visual knowledge acquisition entails understanding images beyond perception to acquire knowledge. This evaluation is conducted through Optical Characters Recognition (OCR) using twelve benchmarks, Key Information Extraction (KIE) using two benchmarks, and Image Captioning (ImgCap) using two benchmarks. The OCR task measures whether a model can accurately identify and extract text from images or scanned documents. The KIE task further poses challenges in extracting structured information from unstructured or semi-structured text. Finally, ImgCap assesses whether a model can generate a good natural language description of the content of an image.

Optical Characters Recognition

IIIT5K

IIIT5K is an ocr dataset that contains words from street scenes and originally-digital images. It is split into 2k/3k for train/test set.

Evaluation data: 3000 (test)

IC13

The ICDAR 2013 dataset consists of 229 training images and 233 testing images, with word-level annotations provided. Specifically, it contains 848 and 1095 cropped text instance images for the train and test sets respectively.

Evaluation data: 848 (train)

IC15

The ICDAR 2015 dataset contains 1500 images: 1000 for training and 500 for testing. Its train/test set contains 4468/2077 cropped text instance images.

Evaluation data: 2077 (test)

Total-Text

The total-test dataset contains 1555 images: 1255 for training and 300 for testing. It contains 2551 cropped text instance images in the test set.

Evaluation data: 2551 (test)

CUTE80

The CUTE80 dataset contains 288 cropped text instance images getting from 80 high-resolution images.

Evaluation data: 288 (all)

SVT

The Street View Text (SVT) dataset was harvested from google street view. It contains 350 images in total and 647 cropped text instance images for testing.

Evaluation data: 647 (test)

SVTP

The SVTP dataset contains 645 cropped text instance images. It is specifically designed to evaluate perspective-distorted text recognition. No train/test split was provided.

Evaluation data: 645 (all)

COCO-Text

The COCO-Text dataset we use is based on the v1.4 annotations, which contains 9896/42618 annotated words in val/train set.

Evaluation data: 9896 (val)

WordArt

The WordArt dataset consists of 6316 artistic text images with 4805 training images and 1511 testing images.

Evaluation data: 1511 (test)

CTW

The SUCT-CTW1500 (CTW) dataset includes over 10,000 text annotations in 1500 images (1000 for training and 500 for testing) used in curved text detection. In our evaluation, we use 1572 rectangle-cropped images getting from the testing set.

Evaluation data: 1572 (test)

HOST

The heavily occluded scene text (HOST) in Occlusion Scene Text (OST) dataset.

Evaluation data: 2416

WOST

The weakly occluded scene text (WOST) in the OST dataset.

Evaluation data: 2416

IIIT5K

IIIT5K is an ocr dataset that contains words from street scenes and originally-digital images. It is split into 2k/3k for train/test set.

Evaluation data: 3000 (test)

IC13

The ICDAR 2013 dataset consists of 229 training images and 233 testing images, with word-level annotations provided. Specifically, it contains 848 and 1095 cropped text instance images for the train and test sets respectively.

Evaluation data: 848 (train)

IC15

The ICDAR 2015 dataset contains 1500 images: 1000 for training and 500 for testing. Its train/test set contains 4468/2077 cropped text instance images.

Evaluation data: 2077 (test)

Total-Text

The total-test dataset contains 1555 images: 1255 for training and 300 for testing. It contains 2551 cropped text instance images in the test set.

Evaluation data: 2551 (test)

CUTE80

The CUTE80 dataset contains 288 cropped text instance images getting from 80 high-resolution images.

Evaluation data: 288 (all)

SVT

The Street View Text (SVT) dataset was harvested from google street view. It contains 350 images in total and 647 cropped text instance images for testing.

Evaluation data: 647 (test)

SVTP

The SVTP dataset contains 645 cropped text instance images. It is specifically designed to evaluate perspective-distorted text recognition. No train/test split was provided.

Evaluation data: 645 (all)

COCO-Text

The COCO-Text dataset we use is based on the v1.4 annotations, which contains 9896/42618 annotated words in val/train set.

Evaluation data: 9896 (val)

WordArt

The WordArt dataset consists of 6316 artistic text images with 4805 training images and 1511 testing images.

Evaluation data: 1511 (test)

CTW

The SUCT-CTW1500 (CTW) dataset includes over 10,000 text annotations in 1500 images (1000 for training and 500 for testing) used in curved text detection. In our evaluation, we use 1572 rectangle-cropped images getting from the testing set.

Evaluation data: 1572 (test)

HOST

The heavily occluded scene text (HOST) in Occlusion Scene Text (OST) dataset.

Evaluation data: 2416

WOST

The weakly occluded scene text (WOST) in the OST dataset.

Evaluation data: 2416

Key Information Extraction

SROIE

The SROIE dataset contains 1000 complete scanned receipt images for OCR and KIE tasks. The dataset is split into 600/400 for the trainval/test set. In the KIE task, it is required to extract company, data, address, and total expenditure information from the receipt and there are 347 annotated receipts in the test set.

Evaluation data: 347 (test)

FUNSD

The FUNSD dataset contains 199 real, fully annotated, scanned forms for the KIE task. It is split 50/149 for the test/train set.

Evaluation data: 50 (test)

SROIE

The SROIE dataset contains 1000 complete scanned receipt images for OCR and KIE tasks. The dataset is split into 600/400 for the trainval/test set. In the KIE task, it is required to extract company, data, address, and total expenditure information from the receipt and there are 347 annotated receipts in the test set.

Evaluation data: 347 (test)

FUNSD

The FUNSD dataset contains 199 real, fully annotated, scanned forms for the KIE task. It is split 50/149 for the test/train set.

Evaluation data: 50 (test)

Image Captioning

NoCaps

The NoCaps dataset contains 15100 images with 166100 human-written captions for novel object image captioning.

Evaluation data: 4500 (val)

Flickr-30k

The Flickr30k dataset consists of 31K images collected from Flickr, each image has five ground truth captions. We use the test split which contains 1K images.

Evaluation data: 1000 (test)

WHOOPS

The WHOOPS dataset includes 500 synthetic and compositional images and 5 captions per image.

Evaluation data: 2500

NoCaps

The NoCaps dataset contains 15100 images with 166100 human-written captions for novel object image captioning.

Evaluation data: 4500 (val)

Flickr-30k

The Flickr30k dataset consists of 31K images collected from Flickr, each image has five ground truth captions. We use the test split which contains 1K images.

Evaluation data: 1000 (test)

WHOOPS

The WHOOPS dataset includes 500 synthetic and compositional images and 5 captions per image.

Evaluation data: 2500

Visual Reasoning

Visual reasoning requires a comprehensive understanding of images and related texts. To evaluate the visual reasoning ability of LVLMs, we utilize three tasks including visual question answering, knowledge-grounded image description, and visual entailment. A capable LVLM should be able to understand the objects and scenes in an image and can reason to generate answers that are semantically meaningful to the question asked.

Visual Question Answering

DocVQA

DocVQA contains 12K images and 50K manually annotated questions and answers.

Evaluation data: 5349 (val)

TextVQA

We use the latest v0.5.1 version of TextVQA dataset. It contains 34602 questions based on 21953 images from OpenImages' training set. Its validation set contains 5000 questions based on 3166 images.

Evaluation data: 5000 (val)

STVQA

Scene Text Visual Question Answering (STVQA) consists of 31,000+ questions across 23,000+ images collected from various public datasets. It contains 26074 questions in the train set and we sample 4000 samples from the train set in default order with seed 0.

Evaluation data: 4000 (train)

OCR-VQA

OCRVQA contains 100037 question-answer pairs spanning 207572 book cover images.

Evaluation data: 100037 (all)

OKVQA

OKVQA is a dataset about outside knowledge visual question answering. It contains 14055 open-ended question-answer pairs in total.

Evaluation data: 5046 (val)

OKVQA

GQA is a visual question-answering dataset with real images from the Visual Genome dataset.

Evaluation data: 12578 (testdev)

Visdial

Visual Dialog (Visdial) contain images sampled from COCO2014 and each dialog has 10 rounds. In our evaluation, we treat it as a VQA dataset by splitting each dialog sample into question-answer pairs by rounds. As there are 2064 dialog samples in the validation set, we have 20640 question-answer pairs collected from the validation set.

Evaluation data: 20640 (val)

IconQA

IconQA dataset provide diverse visual question-answering samples and we use the test set in its multi-text-choice task.

Evaluation data: 6316 (test)

VSR

Visual Spatial Reasoning (VSR) dataset contains a collection of caption-image pairs with true/false labels. We treat it as a VQA dataset by asking the model to answer True or False.

Evaluation data: 10972 (all)

WHOOPS

The WHOOPS dataset encompasses 500 synthetic and compositional images and 3662 question-answer pairs in total. Specifically there is only one answer for each question.

Evaluation data: 3662

DocVQA

DocVQA contains 12K images and 50K manually annotated questions and answers.

Evaluation data: 5349 (val)

TextVQA

We use the latest v0.5.1 version of TextVQA dataset. It contains 34602 questions based on 21953 images from OpenImages' training set. Its validation set contains 5000 questions based on 3166 images.

Evaluation data: 5000 (val)

STVQA

Scene Text Visual Question Answering (STVQA) consists of 31,000+ questions across 23,000+ images collected from various public datasets. It contains 26074 questions in the train set and we sample 4000 samples from the train set in default order with seed 0.

Evaluation data: 4000 (train)

OCR-VQA

OCRVQA contains 100037 question-answer pairs spanning 207572 book cover images.

Evaluation data: 100037 (all)

OKVQA

OKVQA is a dataset about outside knowledge visual question answering. It contains 14055 open-ended question-answer pairs in total.

Evaluation data: 5046 (val)

OKVQA

GQA is a visual question-answering dataset with real images from the Visual Genome dataset.

Evaluation data: 12578 (testdev)

Visdial

Visual Dialog (Visdial) contain images sampled from COCO2014 and each dialog has 10 rounds. In our evaluation, we treat it as a VQA dataset by splitting each dialog sample into question-answer pairs by rounds. As there are 2064 dialog samples in the validation set, we have 20640 question-answer pairs collected from the validation set.

Evaluation data: 20640 (val)

IconQA

IconQA dataset provide diverse visual question-answering samples and we use the test set in its multi-text-choice task.

Evaluation data: 6316 (test)

VSR

Visual Spatial Reasoning (VSR) dataset contains a collection of caption-image pairs with true/false labels. We treat it as a VQA dataset by asking the model to answer True or False.

Evaluation data: 10972 (all)

WHOOPS

The WHOOPS dataset encompasses 500 synthetic and compositional images and 3662 question-answer pairs in total. Specifically there is only one answer for each question.

Evaluation data: 3662

Knowledge-grounded Image Description

ScienceQA IMG

ScienceQA is a multimodal benchmark containing multiple choice questions with a diverse set of science topics. In our evaluation, we only use the samples with images in the test set.

Evaluation data: 2017 (test)

VizWiz

VizWiz is a VQA dataset whose answers are got by asking blind people.

Evaluation data: 1131 (val)

ScienceQA IMG

ScienceQA is a multimodal benchmark containing multiple choice questions with a diverse set of science topics. In our evaluation, we only use the samples with images in the test set.

Evaluation data: 2017 (test)

VizWiz

VizWiz is a VQA dataset whose answers are got by asking blind people.

Evaluation data: 1131 (val)

Visual Entailment

SNLI-VE

SNLI-VE extends the text entailment (TE) task into the visual domain and asks the model whether the image is semantically entailed, neutral, or contradicted to the next hypothesis. It is a three-category classification task based on Flicker30k.

Evaluation data: 500 (dev)

SNLI-VE

SNLI-VE extends the text entailment (TE) task into the visual domain and asks the model whether the image is semantically entailed, neutral, or contradicted to the next hypothesis. It is a three-category classification task based on Flicker30k.

Evaluation data: 500 (dev)

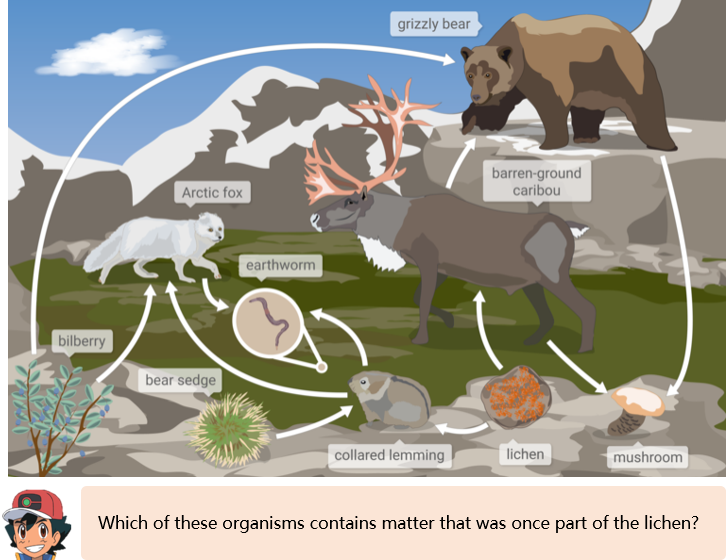

Visual Commonsense

Visual commonsense refers to the general visual knowledge commonly shared across the world, as opposed to the visual information specific to a single image. This evaluation tests the model’s understanding of commonly shared human knowledge about generic visual concepts using ImageNetVC and visual commonsense reasoning (VCR). Specifically, ImageNetVC is utilized for zero-shot visual commonsense evaluation, such as color and shape, while VCR covers various scenes, such as spatial, casual, and mental commonsense.

Visual Commonsense

ImageNetVC

ImageNetVC is a fine-grained human-annotated dataset for zero-shot visual commonsense evaluation, containing high-quality QA pairs across diverse domains with sufficient image sources.

Evaluation data: 10000 (rank)

VCR

VCR is a challenging multiple-choice VQA dataset that needs commonsense knowledge to understand the visual scenes and requires multiple-steps reasoning to answer the question..

Evaluation data: 500 (val)

ImageNetVC

ImageNetVC is a fine-grained human-annotated dataset for zero-shot visual commonsense evaluation, containing high-quality QA pairs across diverse domains with sufficient image sources.

Evaluation data: 10000 (rank)

VCR

VCR is a challenging multiple-choice VQA dataset that needs commonsense knowledge to understand the visual scenes and requires multiple-steps reasoning to answer the question..

Evaluation data: 500 (val)

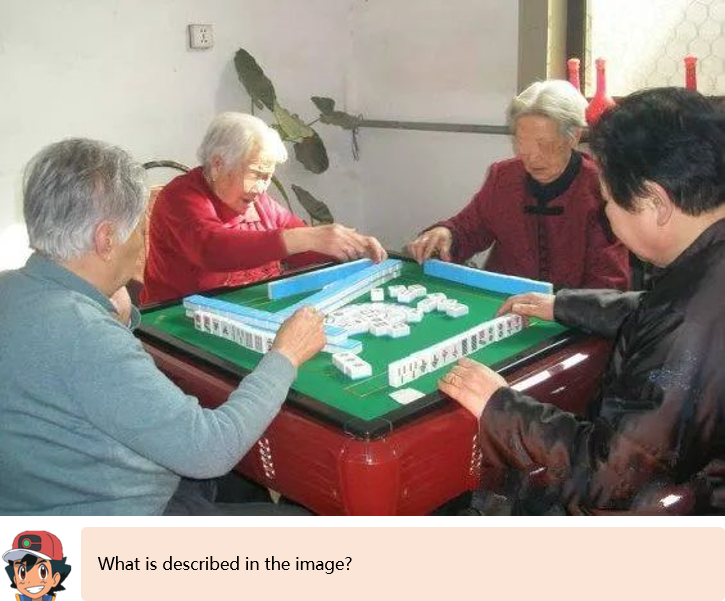

Object Hallucination

LVLM suffers from the object hallucination problem, i.e., the generated results are inconsistent with the target images in the descriptions. Evaluating object hallucination for different LVLMs help understand their respective weaknesses. To this end, we evaluate the object hallucination problem of LVLMs on the MSCOCO dataset under POPE pipeline.

Object Hallucination

COCO-Random

We randomly select 500 images from the validation set of MSCOCO with more than three ground-truth objects in the annotations and construct 6 questions for each image. The probing objects in the questions that do not exist in the image are randomly sampled.

Evaluation data: 3000(val)

MSCOCO-Popular

Similar to COCO-Random, we randomly select 500 images and construct 6 questions for each image. But the probing objects in the questions that do not exist in the image are selected from the top-50% most frequent objects in MSCOCO.

Evaluation data: 3000(val)

MSCOCO-Adversarial

Similar to COCO-Random, we randomly select 500 images and construct 6 questions for each image. But the probing objects in the questions that do not exist in the image are selected from the ranked objects with their co-occurring frequency and the top-50% most frequent objects are sampled.

Evaluation data: 3000(val)

COCO-Random

We randomly select 500 images from the validation set of MSCOCO with more than three ground-truth objects in the annotations and construct 6 questions for each image. The probing objects in the questions that do not exist in the image are randomly sampled.

Evaluation data: 3000(val)

MSCOCO-Popular

Similar to COCO-Random, we randomly select 500 images and construct 6 questions for each image. But the probing objects in the questions that do not exist in the image are selected from the top-50% most frequent objects in MSCOCO.

Evaluation data: 3000(val)

MSCOCO-Adversarial

Similar to COCO-Random, we randomly select 500 images and construct 6 questions for each image. But the probing objects in the questions that do not exist in the image are selected from the ranked objects with their co-occurring frequency and the top-50% most frequent objects are sampled.

Evaluation data: 3000(val)

Embodied Intelligence

Embodied intelligence aims to create agents, such as robots, which learn to solve challenging tasks requiring environmental interaction. Recently, LLM and LVLM exhibited exceptional effectiveness in guiding the agent to complete a series of tasks. In this evaluation, we utilize high-level tasks in EmbodiedGPT and employ Minecraft, VirtualHome, Meta-World, and Franks Kitchen as benchmarks.

Embodied AI Tasks

Minecraft

Evaluation data: Selected sample

VirtualHome

Evaluation data: Selected sample

Meta-World

Evaluation data: Selected sample

Franka Kitchen

Evaluation data: Selected sample

Minecraft

Evaluation data: Selected sample

VirtualHome

Evaluation data: Selected sample

Meta-World

Evaluation data: Selected sample

Franka Kitchen

Evaluation data: Selected sample